ESXi unregisters virtual machines

For some time now I have been using an old HP G7 server with ESXi 6.5 and a custom image for my IT projects. Since the box only runs sporadically, I only noticed after the last start that the VMs lose their registration.

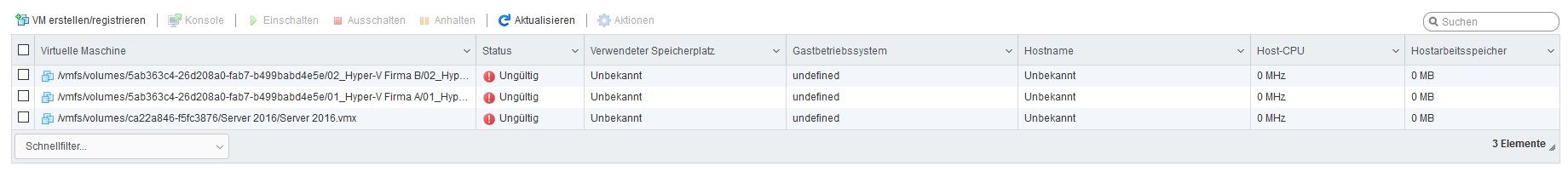

After booting, the VMs are simply no longer registered and linger as dead entries in the list of virtual machines. If I unregister a VM and register it again as an existing machine, it works without problems until the next reboot; after that it is orphaned again.

Two of the three machines are nested Hyper-V hosts that simulate two sites. At first I suspected the RAID controller's battery was dead, but that would be reported during boot (it's an HP Smart Array). The disks themselves also look fine and report no problems.

I also noticed that the host no longer saves settings at all. Virtual switches that I created are gone after a reboot. To narrow down where the problem comes from (ESXi or the server hardware), I'm asking here: do you have a tip or something similar for getting this machine running properly again?

Best regards

Content-ID: 441364

Url: https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html

Printed on: 24.07.2025 at 10:07

https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html#comment-1360613

What do the logs say, and which scripts are running? Is everything else OK (licenses and so on)?

Why is it that people asking questions here regularly have to be prompted to do some groundwork themselves first?

Best regards
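
As a first pass at "what do the logs say", a long syslog excerpt like the one posted later in this thread can be tallied per source tag to see which process dominates. A minimal Python sketch, assuming the log has been copied off the host as plain text; the regex only covers the `<timestamp>Z <tag>: <message>` shape seen in this thread and is not a complete ESXi log parser.

```python
import re
from collections import Counter

# Matches lines shaped like the ones in this thread, e.g.
# "2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC ..."
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z)\s+(?P<tag>[^:\s]+):\s?(?P<msg>.*)$"
)
# Trailing PID decorations such as "backup.sh.72360" or "crond[66699]"
PID_SUFFIX_RE = re.compile(r"(\.\d+|\[\d+\])$")


def summarize(lines):
    """Count log lines per source tag, with PID suffixes folded away."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m is None:
            continue  # blank lines or wrapped continuation text
        tag = PID_SUFFIX_RE.sub("", m.group("tag"))
        counts[tag] += 1
    return counts
```

Usage, assuming the excerpt was saved as `syslog.log` (a hypothetical file name): `for tag, n in summarize(open("syslog.log")).most_common(): print("{:6d}  {}".format(n, tag))`. The `.format` call is used instead of an f-string so the sketch also runs on older Python 3 interpreters.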

https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html#comment-1360616

Hello,

Otherwise, I see it the same way you do.

Best regards

https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html#comment-1360618

This sounds as if the datastore in question is not online in time after the host boots.

E.
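
One way to test the "datastore not online in time" theory is to measure how long it takes for the datastore's mount point under `/vmfs/volumes/` to appear, starting the measurement as early as possible after the host comes up. A minimal Python sketch that polls for a path with a timeout; it assumes a Python interpreter is available in the ESXi shell, and the datastore name in the usage note is a hypothetical example, not taken from the thread.

```python
import os
import time


def wait_for_path(path, timeout_s=300.0, interval_s=5.0):
    """Poll until `path` exists.

    Returns the number of seconds waited, or None if the path did not
    appear within `timeout_s` seconds.
    """
    start = time.monotonic()
    while True:
        if os.path.exists(path):
            return time.monotonic() - start
        if time.monotonic() - start >= timeout_s:
            return None
        time.sleep(interval_s)
```

For example, `wait_for_path("/vmfs/volumes/datastore1")` (hypothetical datastore name) returning `None` or a large value would support the theory; the result only means something if the check starts shortly after boot, not minutes later over SSH.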

https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html#comment-1360620

https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html#comment-1360627

https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html#comment-1360630

Okay, fair point. I'll post the logs shortly, of course. I'm either already in weekend mode or not quite on top of things today.

Then please boot yourself up and ask the question again, properly this time.

https://administrator.de/forum/esxi-hebt-registrierung-von-masschinen-auf-441364.html#comment-1360643

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:11Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:12Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:12:12Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72297

2019-04-17T14:12:12Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:12Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:12Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:12Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:12:12Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72309

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:31Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:12:31Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72313

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:36Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:12:36Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72320

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:40Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:12:40Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72330

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:12:40Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:12Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:12Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:12Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:31Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:13:31Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72342

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:31Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:13:31Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72347

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:31Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:13:31Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72351

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:13:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:02Z backup.sh.72360: Locking esx.conf

2019-04-17T14:14:02Z backup.sh.72360: Creating archive

2019-04-17T14:14:03Z backup.sh.72360: Unlocking esx.conf

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:36Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:14:36Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72516

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:36Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:14:57Z backup.sh.72561: Locking esx.conf

2019-04-17T14:14:57Z backup.sh.72561: Creating archive

2019-04-17T14:14:57Z backup.sh.72561: Unlocking esx.conf

2019-04-17T14:14:58Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:14:58Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:14:58Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:01Z crond[66699]: crond: USER root pid 72716 cmd /bin/hostd-probe.sh ++group=host/vim/vmvisor/hostd-probe/stats/sh

2019-04-17T14:15:01Z syslog[72719]: starting hostd probing.

2019-04-17T14:15:17Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:15:17Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72715

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:17Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:25Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:15:25Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72742

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:25Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:31Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:15:31Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72752

2019-04-17T14:15:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:15:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:15:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:15:31Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:15:31Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72754

2019-04-17T14:15:38Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 1

2019-04-17T14:15:38Z lwsmd: [netlogon] DNS lookup for '_ldap._tcp.dc._msdcs.Testlab.test' failed with errno 0, h_errno = 1

2019-04-17T14:16:31Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:16:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:16:31Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:16:50Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:16:50Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72765

2019-04-17T14:16:50Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:16:50Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:16:50Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:16:50Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:16:50Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72768

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:06Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:17:06Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72778

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:06Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:07Z backup.sh.72785: Locking esx.conf

2019-04-17T14:17:07Z backup.sh.72785: Creating archive

2019-04-17T14:17:07Z backup.sh.72785: Unlocking esx.conf

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:07Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:17:07Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72937

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:07Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:07Z ImageConfigManager: 2019-04-17 14:17:07,975 [MainProcess INFO 'HostImage' MainThread] Installer <class 'vmware.esximage.Installer.BootBankInstaller.BootBankInstaller'> was not initiated - reason: altbootbank is invalid: Error in loading boot.cfg from bootbank /bootbank: Error parsing bootbank boot.cfg file /bootbank/boot.cfg: [Errno 2] No such file or directory: '/bootbank/boot.cfg'

2019-04-17T14:17:07Z ImageConfigManager: 2019-04-17 14:17:07,975 [MainProcess INFO 'HostImage' MainThread] Installers initiated are {'live': <vmware.esximage.Installer.LiveImageInstaller.LiveImageInstaller object at 0x9e55ecf9b0>}

2019-04-17T14:17:07Z hostd-icm[72951]: Registered 'ImageConfigManagerImpl:ha-image-config-manager'

2019-04-17T14:17:07Z ImageConfigManager: 2019-04-17 14:17:07,975 [MainProcess INFO 'root' MainThread] Starting CGI server on stdin/stdout

2019-04-17T14:17:07Z ImageConfigManager: 2019-04-17 14:17:07,976 [MainProcess DEBUG 'root' MainThread] b'<?xml version="1.0" encoding="UTF-8"?>\n<soapenv:Envelope xmlns:soapenc="http://schemas.xmlsoap.org/soap/encoding/"\n xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/"\n xmlns:xsd="http://www.w3.org/2001/XMLSchema"\n xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">\n<soapenv:Header>\n<operationID xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="urn:vim25" versionId="6.5" xsi:type="xsd:string">esxui-d77d-c4a7</operationID><taskKey xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="urn:vim25" versionId="6.5" xsi:type="xsd:string">haTask--vim.host.ImageConfigManager.installDate-118488364</taskKey>\n</soapenv:Header>\n<soapenv:Body>\n<installDate xmlns="urn:vim25"><_this type="Host

2019-04-17T14:17:07Z ImageConfigManager: ImageConfigManager">ha-image-config-manager</_this></installDate>\n</soapenv:Body>\n</soapenv:Envelope>'

2019-04-17T14:17:08Z ImageConfigManager: 2019-04-17 14:17:08,090 [MainProcess DEBUG 'root' MainThread] <?xml version="1.0" encoding="UTF-8"?><soapenv:Envelope xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:soapenc="http://schemas.xmlsoap.org/soap/encoding/"> <soapenv:Body><installDateResponse xmlns='urn:vim25'><returnval>2018-01-31T20:00:52Z</returnval></installDateResponse></soapenv:Body></soapenv:Envelope>

2019-04-17T14:17:08Z ImageConfigManager: 2019-04-17 14:17:08,217 [MainProcess INFO 'HostImage' MainThread] Installer <class 'vmware.esximage.Installer.BootBankInstaller.BootBankInstaller'> was not initiated - reason: altbootbank is invalid: Error in loading boot.cfg from bootbank /bootbank: Error parsing bootbank boot.cfg file /bootbank/boot.cfg: [Errno 2] No such file or directory: '/bootbank/boot.cfg'

2019-04-17T14:17:08Z ImageConfigManager: 2019-04-17 14:17:08,218 [MainProcess INFO 'HostImage' MainThread] Installers initiated are {'live': <vmware.esximage.Installer.LiveImageInstaller.LiveImageInstaller object at 0x8bdccb4978>}

2019-04-17T14:17:08Z hostd-icm[72959]: Registered 'ImageConfigManagerImpl:ha-image-config-manager'

2019-04-17T14:17:08Z ImageConfigManager: 2019-04-17 14:17:08,218 [MainProcess INFO 'root' MainThread] Starting CGI server on stdin/stdout

2019-04-17T14:17:08Z ImageConfigManager: 2019-04-17 14:17:08,219 [MainProcess DEBUG 'root' MainThread] b'<?xml version="1.0" encoding="UTF-8"?>\n<soapenv:Envelope xmlns:soapenc="http://schemas.xmlsoap.org/soap/encoding/"\n xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/"\n xmlns:xsd="http://www.w3.org/2001/XMLSchema"\n xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">\n<soapenv:Header>\n<operationID xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="urn:vim25" versionId="6.5" xsi:type="xsd:string">esxui-48da-c4b4</operationID><taskKey xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="urn:vim25" versionId="6.5" xsi:type="xsd:string">haTask--vim.host.ImageConfigManager.queryHostImageProfile-118488367</taskKey>\n</soapenv:Header>\n<soapenv:Body>\n<HostImageConfigGetProfile xmlns="urn:

2019-04-17T14:17:08Z ImageConfigManager: vim25"><_this type="HostImageConfigManager">ha-image-config-manager</_this></HostImageConfigGetProfile>\n</soapenv:Body>\n</soapenv:Envelope>'

2019-04-17T14:17:08Z ImageConfigManager: 2019-04-17 14:17:08,237 [MainProcess DEBUG 'root' MainThread] <?xml version="1.0" encoding="UTF-8"?><soapenv:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:soapenc="http://schemas.xmlsoap.org/soap/encoding/" xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/" xmlns:xsd="http://www.w3.org/2001/XMLSchema"> <soapenv:Body><HostImageConfigGetProfileResponse xmlns='urn:vim25'><returnval><name>(Updated) ESXICUST</name><vendor>Muffin's ESX Fix</vendor></returnval></HostImageConfigGetProfileResponse></soapenv:Body></soapenv:Envelope>

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:10Z lwsmd: [LwKrb5GetTgtImpl ../lwadvapi/threaded/krbtgt.c:262] KRB5 Error code: -1765328228 (Message: Cannot contact any KDC for realm 'TESTLAB.TEST')

2019-04-17T14:17:10Z lwsmd: [lsass] Failed to run provider specific request (request code = 14, provider = 'lsa-activedirectory-provider') -> error = 40121, symbol = LW_ERROR_DOMAIN_IS_OFFLINE, client pid = 72971

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'TESTLAB.TEST', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 100

2019-04-17T14:17:10Z lwsmd: [netlogon] Looking for a DC in domain 'Testlab.test', site '<null>' with flags 140

2019-04-17T14:17:15Z init: starting pid 72977, tty '': '/usr/lib/vmware/vmksummary/log-bootstop.sh stop'

2019-04-17T14:17:15Z addVob[72979]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-17T14:17:15Z addVob[72979]: DictionaryLoad: Cannot open file "//.vmware/config": No such file or directory.

2019-04-17T14:17:15Z addVob[72979]: DictionaryLoad: Cannot open file "//.vmware/preferences": No such file or directory.

2019-04-17T14:17:15Z init: starting pid 72980, tty '': '/bin/shutdown.sh'

2019-04-17T14:17:15Z VMware[shutdown]: Stopping VMs

2019-04-17T14:17:15Z jumpstart[72996]: executing stop for daemon hp-ams.sh.

2019-04-17T14:17:15Z root: ams stop watchdog...

2019-04-17T14:17:15Z root: ams-wd: ams-watchdog stop.

2019-04-17T14:17:15Z root: Terminating ams-watchdog process with PID 73004 73005

2019-04-17T14:17:17Z root: ams stop service...

2019-04-17T14:17:20Z jumpstart[72996]: executing stop for daemon xorg.

2019-04-17T14:17:20Z jumpstart[72996]: Jumpstart failed to stop: xorg reason: Execution of command: /etc/init.d/xorg stop failed with status: 3

2019-04-17T14:17:20Z jumpstart[72996]: executing stop for daemon vmsyslogd.

2019-04-17T14:17:20Z jumpstart[72996]: Jumpstart failed to stop: vmsyslogd reason: Execution of command: /etc/init.d/vmsyslogd stop failed with status: 1

2019-04-17T14:17:20Z jumpstart[72996]: executing stop for daemon vmtoolsd.

2019-04-17T14:17:20Z jumpstart[72996]: Jumpstart failed to stop: vmtoolsd reason: Execution of command: /etc/init.d/vmtoolsd stop failed with status: 1

2019-04-17T14:17:20Z jumpstart[72996]: executing stop for daemon wsman.

2019-04-17T14:17:20Z openwsmand: Getting Exclusive access, please wait...

2019-04-17T14:17:20Z openwsmand: Exclusive access granted.

2019-04-17T14:17:20Z openwsmand: Stopping openwsmand

2019-04-17T14:17:20Z watchdog-openwsmand: Watchdog for openwsmand is now 68106

2019-04-17T14:17:20Z watchdog-openwsmand: Terminating watchdog process with PID 68106

2019-04-17T14:17:20Z watchdog-openwsmand: [68106] Signal received: exiting the watchdog

2019-04-17T14:17:21Z jumpstart[72996]: executing stop for daemon snmpd.

2019-04-17T14:17:21Z root: Stopping snmpd by administrative request

2019-04-17T14:17:21Z root: snmpd is not running.

2019-04-17T14:17:21Z jumpstart[72996]: executing stop for daemon sfcbd-watchdog.

2019-04-17T14:17:21Z sfcbd-init: Getting Exclusive access, please wait...

2019-04-17T14:17:21Z sfcbd-init: Exclusive access granted.

2019-04-17T14:17:21Z sfcbd-init: Request to stop sfcbd-watchdog, pid 73068

2019-04-17T14:17:21Z sfcbd-init: Invoked kill 68069

2019-04-17T14:17:21Z sfcb-vmware_raw[68596]: stopProcMICleanup: Cleanup t=1 not implemented for provider type: 8

2019-04-17T14:17:21Z sfcb-vmware_base[68588]: VICimProvider exiting on WFU cancelled.

2019-04-17T14:17:21Z sfcb-vmware_base[68588]: stopProcMICleanup: Cleanup t=1 not implemented for provider type: 8

2019-04-17T14:17:24Z sfcbd-init: stop sfcbd process completed.

2019-04-17T14:17:24Z jumpstart[72996]: executing stop for daemon vit_loader.sh.

2019-04-17T14:17:24Z VITLOADER: [etc/init.d/vit_loader] Shutdown VITD successfully

2019-04-17T14:17:24Z jumpstart[72996]: executing stop for daemon hpe-smx.init.

2019-04-17T14:17:25Z jumpstart[72996]: executing stop for daemon hpe-nmi.init.

2019-04-17T14:17:25Z jumpstart[72996]: executing stop for daemon hpe-fc.sh.

2019-04-17T14:17:25Z jumpstart[72996]: executing stop for daemon lwsmd.

2019-04-17T14:17:25Z watchdog-lwsmd: Watchdog for lwsmd is now 67833

2019-04-17T14:17:25Z watchdog-lwsmd: Terminating watchdog process with PID 67833

2019-04-17T14:17:25Z watchdog-lwsmd: [67833] Signal received: exiting the watchdog

2019-04-17T14:17:25Z lwsmd: Shutting down running services

2019-04-17T14:17:25Z lwsmd: Stopping service: lsass

2019-04-17T14:17:25Z lwsmd: [lsass-ipc] Shutting down listener

2019-04-17T14:17:25Z lwsmd: [lsass-ipc] Listener shut down

2019-04-17T14:17:25Z lwsmd: [lsass-ipc] Shutting down listener

2019-04-17T14:17:25Z lwsmd: [lsass-ipc] Listener shut down

2019-04-17T14:17:25Z lwsmd: [lsass] Machine Password Sync Thread stopping

2019-04-17T14:17:25Z lwsmd: [lsass] LSA Service exiting...

2019-04-17T14:17:25Z lwsmd: Stopping service: rdr

2019-04-17T14:17:25Z lwsmd: Stopping service: lwio

2019-04-17T14:17:25Z lwsmd: [lwio-ipc] Shutting down listener

2019-04-17T14:17:25Z lwsmd: [lwio-ipc] Listener shut down

2019-04-17T14:17:25Z lwsmd: [lwio] LWIO Service exiting...

2019-04-17T14:17:25Z lwsmd: Stopping service: netlogon

2019-04-17T14:17:25Z lwsmd: [netlogon-ipc] Shutting down listener

2019-04-17T14:17:25Z lwsmd: [netlogon-ipc] Listener shut down

2019-04-17T14:17:25Z lwsmd: [netlogon] LWNET Service exiting...

2019-04-17T14:17:25Z lwsmd: Stopping service: lwreg

2019-04-17T14:17:25Z lwsmd: [lwreg-ipc] Shutting down listener

2019-04-17T14:17:25Z lwsmd: [lwreg-ipc] Listener shut down

2019-04-17T14:17:25Z lwsmd: [lwreg] REG Service exiting...

2019-04-17T14:17:25Z lwsmd: [lwsm-ipc] Shutting down listener

2019-04-17T14:17:25Z lwsmd: [lwsm-ipc] Listener shut down

2019-04-17T14:17:25Z lwsmd: Logging stopped

2019-04-17T14:17:27Z jumpstart[72996]: executing stop for daemon vpxa.

2019-04-17T14:17:27Z watchdog-vpxa: Watchdog for vpxa is now 67792

2019-04-17T14:17:28Z watchdog-vpxa: Terminating watchdog process with PID 67792

2019-04-17T14:17:28Z watchdog-vpxa: [67792] Signal received: exiting the watchdog

2019-04-17T14:17:28Z jumpstart[72996]: executing stop for daemon vobd.

2019-04-17T14:17:28Z watchdog-vobd: Watchdog for vobd is now 65960

2019-04-17T14:17:28Z watchdog-vobd: Terminating watchdog process with PID 65960

2019-04-17T14:17:28Z watchdog-vobd: [65960] Signal received: exiting the watchdog

2019-04-17T14:17:28Z jumpstart[72996]: executing stop for daemon dcbd.

2019-04-17T14:17:28Z watchdog-dcbd: Watchdog for dcbd is now 67703

2019-04-17T14:17:28Z watchdog-dcbd: Terminating watchdog process with PID 67703

2019-04-17T14:17:28Z watchdog-dcbd: [67703] Signal received: exiting the watchdog

2019-04-17T14:17:28Z jumpstart[72996]: executing stop for daemon nscd.

2019-04-17T14:17:28Z watchdog-nscd: Watchdog for nscd is now 67721

2019-04-17T14:17:28Z watchdog-nscd: Terminating watchdog process with PID 67721

2019-04-17T14:17:28Z watchdog-nscd: [67721] Signal received: exiting the watchdog

2019-04-17T14:17:28Z jumpstart[72996]: executing stop for daemon cdp.

2019-04-17T14:17:28Z watchdog-cdp: Watchdog for cdp is now 67743

2019-04-17T14:17:28Z watchdog-cdp: Terminating watchdog process with PID 67743

2019-04-17T14:17:28Z watchdog-cdp: [67743] Signal received: exiting the watchdog

2019-04-17T14:17:28Z jumpstart[72996]: executing stop for daemon lacp.

2019-04-17T14:17:28Z watchdog-net-lacp: Watchdog for net-lacp is now 66333

2019-04-17T14:17:29Z watchdog-net-lacp: Terminating watchdog process with PID 66333

2019-04-17T14:17:29Z watchdog-net-lacp: [66333] Signal received: exiting the watchdog

2019-04-17T14:17:29Z jumpstart[72996]: executing stop for daemon smartd.

2019-04-17T14:17:29Z watchdog-smartd: Watchdog for smartd is now 67762

2019-04-17T14:17:29Z watchdog-smartd: Terminating watchdog process with PID 67762

2019-04-17T14:17:29Z watchdog-smartd: [67762] Signal received: exiting the watchdog

2019-04-17T14:17:29Z smartd: [warn] smartd received signal 15

2019-04-17T14:17:29Z smartd: [warn] smartd exit.

2019-04-17T14:17:29Z jumpstart[72996]: executing stop for daemon memscrubd.

2019-04-17T14:17:29Z jumpstart[72996]: Jumpstart failed to stop: memscrubd reason: Execution of command: /etc/init.d/memscrubd stop failed with status: 3

2019-04-17T14:17:29Z jumpstart[72996]: executing stop for daemon slpd.

2019-04-17T14:17:29Z root: slpd Stopping slpd

2019-04-17T14:17:29Z slpd[67695]: SLPD daemon shutting down

2019-04-17T14:17:29Z slpd[67695]: *** SLPD daemon shut down by administrative request

2019-04-17T14:17:29Z jumpstart[72996]: executing stop for daemon sensord.

2019-04-17T14:17:29Z watchdog-sensord: Watchdog for sensord is now 67094

2019-04-17T14:17:29Z watchdog-sensord: Terminating watchdog process with PID 67094

2019-04-17T14:17:29Z watchdog-sensord: [67094] Signal received: exiting the watchdog

2019-04-17T14:17:30Z jumpstart[72996]: executing stop for daemon storageRM.

2019-04-17T14:17:30Z watchdog-storageRM: Watchdog for storageRM is now 67114

2019-04-17T14:17:30Z watchdog-storageRM: Terminating watchdog process with PID 67114

2019-04-17T14:17:30Z watchdog-storageRM: [67114] Signal received: exiting the watchdog

2019-04-17T14:17:30Z jumpstart[72996]: executing stop for daemon hostd.

2019-04-17T14:17:30Z watchdog-hostd: Watchdog for hostd is now 67140

2019-04-17T14:17:30Z watchdog-hostd: Terminating watchdog process with PID 67140

2019-04-17T14:17:30Z watchdog-hostd: [67140] Signal received: exiting the watchdog

2019-04-17T14:17:30Z jumpstart[72996]: executing stop for daemon sdrsInjector.

2019-04-17T14:17:30Z watchdog-sdrsInjector: Watchdog for sdrsInjector is now 67159

2019-04-17T14:17:30Z watchdog-sdrsInjector: Terminating watchdog process with PID 67159

2019-04-17T14:17:30Z watchdog-sdrsInjector: [67159] Signal received: exiting the watchdog

2019-04-17T14:17:30Z jumpstart[72996]: executing stop for daemon nfcd.

2019-04-17T14:17:30Z jumpstart[72996]: executing stop for daemon vvold.

2019-04-17T14:17:31Z jumpstart[72996]: Jumpstart failed to stop: vvold reason: Execution of command: /etc/init.d/vvold stop failed with status: 3

2019-04-17T14:17:31Z jumpstart[72996]: executing stop for daemon rhttpproxy.

2019-04-17T14:17:31Z watchdog-rhttpproxy: Watchdog for rhttpproxy is now 67521

2019-04-17T14:17:31Z watchdog-rhttpproxy: Terminating watchdog process with PID 67521

2019-04-17T14:17:31Z watchdog-rhttpproxy: [67521] Signal received: exiting the watchdog

2019-04-17T14:17:31Z jumpstart[72996]: executing stop for daemon hostdCgiServer.

2019-04-17T14:17:31Z watchdog-hostdCgiServer: Watchdog for hostdCgiServer is now 67548

2019-04-17T14:17:31Z watchdog-hostdCgiServer: Terminating watchdog process with PID 67548

2019-04-17T14:17:31Z watchdog-hostdCgiServer: [67548] Signal received: exiting the watchdog

2019-04-17T14:17:31Z jumpstart[72996]: executing stop for daemon lbtd.

2019-04-17T14:17:31Z watchdog-net-lbt: Watchdog for net-lbt is now 67576

2019-04-17T14:17:31Z watchdog-net-lbt: Terminating watchdog process with PID 67576

2019-04-17T14:17:31Z watchdog-net-lbt: [67576] Signal received: exiting the watchdog

2019-04-17T14:17:31Z jumpstart[72996]: executing stop for daemon rabbitmqproxy.

2019-04-17T14:17:31Z jumpstart[72996]: executing stop for daemon vmfstraced.

2019-04-17T14:17:31Z watchdog-vmfstracegd: PID file /var/run/vmware/watchdog-vmfstracegd.PID does not exist

2019-04-17T14:17:31Z watchdog-vmfstracegd: Unable to terminate watchdog: No running watchdog process for vmfstracegd

2019-04-17T14:17:32Z vmfstracegd: Failed to clear vmfstracegd memory reservation

2019-04-17T14:17:32Z jumpstart[72996]: executing stop for daemon esxui.

2019-04-17T14:17:32Z jumpstart[72996]: executing stop for daemon iofilterd-vmwarevmcrypt.

2019-04-17T14:17:32Z iofilterd-vmwarevmcrypt[73649]: Could not expand environment variable HOME.

2019-04-17T14:17:32Z iofilterd-vmwarevmcrypt[73649]: Could not expand environment variable HOME.

2019-04-17T14:17:32Z iofilterd-vmwarevmcrypt[73649]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-17T14:17:32Z iofilterd-vmwarevmcrypt[73649]: DictionaryLoad: Cannot open file "~/.vmware/config": No such file or directory.

2019-04-17T14:17:32Z iofilterd-vmwarevmcrypt[73649]: DictionaryLoad: Cannot open file "~/.vmware/preferences": No such file or directory.

2019-04-17T14:17:32Z iofilterd-vmwarevmcrypt[73649]: Resource Pool clean up for iofilter vmwarevmcrypt is done

2019-04-17T14:17:32Z jumpstart[72996]: executing stop for daemon swapobjd.

2019-04-17T14:17:32Z watchdog-swapobjd: Watchdog for swapobjd is now 67000

2019-04-17T14:17:32Z watchdog-swapobjd: Terminating watchdog process with PID 67000

2019-04-17T14:17:32Z watchdog-swapobjd: [67000] Signal received: exiting the watchdog

2019-04-17T14:17:33Z jumpstart[72996]: executing stop for daemon usbarbitrator.

2019-04-17T14:17:33Z watchdog-usbarbitrator: Watchdog for usbarbitrator is now 67038

2019-04-17T14:17:33Z watchdog-usbarbitrator: Terminating watchdog process with PID 67038

2019-04-17T14:17:33Z watchdog-usbarbitrator: [67038] Signal received: exiting the watchdog

2019-04-17T14:17:33Z jumpstart[72996]: executing stop for daemon iofilterd-spm.

2019-04-17T14:17:33Z iofilterd-spm[73712]: Could not expand environment variable HOME.

2019-04-17T14:17:33Z iofilterd-spm[73712]: Could not expand environment variable HOME.

2019-04-17T14:17:33Z iofilterd-spm[73712]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-17T14:17:33Z iofilterd-spm[73712]: DictionaryLoad: Cannot open file "~/.vmware/config": No such file or directory.

2019-04-17T14:17:33Z iofilterd-spm[73712]: DictionaryLoad: Cannot open file "~/.vmware/preferences": No such file or directory.

2019-04-17T14:17:33Z iofilterd-spm[73712]: Resource Pool clean up for iofilter spm is done

2019-04-17T14:17:33Z jumpstart[72996]: executing stop for daemon ESXShell.

2019-04-17T14:17:33Z addVob[73719]: Could not expand environment variable HOME.

2019-04-17T14:17:33Z addVob[73719]: Could not expand environment variable HOME.

2019-04-17T14:17:33Z addVob[73719]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-17T14:17:33Z addVob[73719]: DictionaryLoad: Cannot open file "~/.vmware/config": No such file or directory.

2019-04-17T14:17:33Z addVob[73719]: DictionaryLoad: Cannot open file "~/.vmware/preferences": No such file or directory.

2019-04-17T14:17:33Z addVob[73719]: VobUserLib_Init failed with -1

2019-04-17T14:17:33Z doat: Stopped wait on component ESXShell.stop

2019-04-17T14:17:33Z doat: Stopped wait on component ESXShell.disable

2019-04-17T14:17:33Z jumpstart[72996]: executing stop for daemon DCUI.

2019-04-17T14:17:33Z root: DCUI Disabling DCUI logins

2019-04-17T14:17:33Z addVob[73740]: Could not expand environment variable HOME.

2019-04-17T14:17:33Z addVob[73740]: Could not expand environment variable HOME.

2019-04-17T14:17:33Z addVob[73740]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-17T14:17:33Z addVob[73740]: DictionaryLoad: Cannot open file "~/.vmware/config": No such file or directory.

2019-04-17T14:17:33Z addVob[73740]: DictionaryLoad: Cannot open file "~/.vmware/preferences": No such file or directory.

2019-04-17T14:17:33Z addVob[73740]: VobUserLib_Init failed with -1

2019-04-17T14:17:33Z jumpstart[72996]: executing stop for daemon ntpd.

2019-04-17T14:17:33Z root: ntpd Stopping ntpd

2019-04-17T14:17:34Z watchdog-ntpd: Watchdog for ntpd is now 66913

2019-04-17T14:17:34Z watchdog-ntpd: Terminating watchdog process with PID 66913

2019-04-17T14:17:34Z watchdog-ntpd: [66913] Signal received: exiting the watchdog

2019-04-17T14:17:34Z ntpd[66923]: ntpd exiting on signal 1 (Hangup)

2019-04-17T14:17:34Z ntpd[66923]: 185.194.140.199 local addr 192.168.20.20 -> <null>

2019-04-17T14:17:34Z jumpstart[72996]: executing stop for daemon SSH.

2019-04-17T14:17:34Z addVob[73772]: Could not expand environment variable HOME.

2019-04-17T14:17:34Z addVob[73772]: Could not expand environment variable HOME.

2019-04-17T14:17:34Z addVob[73772]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-17T14:17:34Z addVob[73772]: DictionaryLoad: Cannot open file "~/.vmware/config": No such file or directory.

2019-04-17T14:17:34Z addVob[73772]: DictionaryLoad: Cannot open file "~/.vmware/preferences": No such file or directory.

2019-04-17T14:17:34Z addVob[73772]: VobUserLib_Init failed with -1

2019-04-17T14:17:34Z doat: Stopped wait on component RemoteShell.disable

2019-04-17T14:17:34Z doat: Stopped wait on component RemoteShell.stop

2019-04-17T14:17:35Z backup.sh.73828: Locking esx.conf

2019-04-17T14:17:35Z backup.sh.73828: Creating archive

2019-04-17T14:17:35Z backup.sh.73828: Unlocking esx.conf

2019-04-18T06:47:42Z watchdog-vobd: [65960] Begin '/usr/lib/vmware/vob/bin/vobd', min-uptime = 60, max-quick-failures = 5, max-total-failures = 1000000, bg_pid_file = '', reboot-flag = '0'

2019-04-18T06:47:42Z watchdog-vobd: Executing '/usr/lib/vmware/vob/bin/vobd'

2019-04-18T06:47:42Z jumpstart[65945]: Launching Executor

2019-04-18T06:47:42Z jumpstart[65945]: Setting up Executor - Reset Requested

2019-04-18T06:47:43Z jumpstart[65945]: ignoring plugin 'vsan-upgrade' because version '2.0.0' has already been run.

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: check-required-memory

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: restore-configuration

2019-04-18T06:47:43Z jumpstart[65991]: restoring configuration

2019-04-18T06:47:43Z jumpstart[65991]: extracting from file /local.tgz

2019-04-18T06:47:43Z jumpstart[65991]: file etc/likewise/db/registry.db has been changed before restoring the configuration - the changes will be lost

2019-04-18T06:47:43Z jumpstart[65991]: ConfigCheck: Running ipv6 option upgrade, redundantly

2019-04-18T06:47:43Z jumpstart[65991]: Util: tcpip4 IPv6 enabled

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: vmkeventd

2019-04-18T06:47:43Z watchdog-vmkeventd: [65993] Begin '/usr/lib/vmware/vmkeventd/bin/vmkeventd', min-uptime = 10, max-quick-failures = 5, max-total-failures = 9999999, bg_pid_file = '', reboot-flag = '0'

2019-04-18T06:47:43Z watchdog-vmkeventd: Executing '/usr/lib/vmware/vmkeventd/bin/vmkeventd'

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: vmkcrypto

2019-04-18T06:47:43Z jumpstart[65970]: 65971:VVOLLIB : VVolLib_GetSoapContext:379: Using 30 secs for soap connect timeout.

2019-04-18T06:47:43Z jumpstart[65970]: 65971:VVOLLIB : VVolLib_GetSoapContext:380: Using 200 secs for soap receive timeout.

2019-04-18T06:47:43Z jumpstart[65970]: 65971:VVOLLIB : VVolLibTracingInit:89: Successfully initialized the VVolLib tracing module

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: autodeploy-enabled

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: vsan-base

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: vsan-early

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: advanced-user-configuration-options

2019-04-18T06:47:43Z jumpstart[65945]: executing start plugin: restore-advanced-configuration

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: PSA-boot-config

2019-04-18T06:47:44Z jumpstart[65970]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-18T06:47:44Z jumpstart[65970]: DictionaryLoad: Cannot open file "//.vmware/config": No such file or directory.

2019-04-18T06:47:44Z jumpstart[65970]: DictionaryLoad: Cannot open file "//.vmware/preferences": No such file or directory.

2019-04-18T06:47:44Z jumpstart[65970]: lib/ssl: OpenSSL using FIPS_drbg for RAND

2019-04-18T06:47:44Z jumpstart[65970]: lib/ssl: protocol list tls1.2

2019-04-18T06:47:44Z jumpstart[65970]: lib/ssl: protocol list tls1.2 (openssl flags 0x17000000)

2019-04-18T06:47:44Z jumpstart[65970]: lib/ssl: cipher list !aNULL:kECDH+AESGCM:ECDH+AESGCM:RSA+AESGCM:kECDH+AES:ECDH+AES:RSA+AES

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: vprobe

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: vmkapi-mgmt

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: dma-engine

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: procfs

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: mgmt-vmkapi-compatibility

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: iodm

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: vmkernel-vmkapi-compatibility

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: driver-status-check

2019-04-18T06:47:44Z jumpstart[66023]: driver_status_check: boot cmdline: /jumpstrt.gz vmbTrustedBoot=false tboot=0x101b000 installerDiskDumpSlotSize=2560 no-auto-partition bootUUID=e78269d0448c41fe200c24e8a54f93c1

2019-04-18T06:47:44Z jumpstart[66023]: driver_status_check: useropts:

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: hardware-config

2019-04-18T06:47:44Z jumpstart[66024]: Failed to symlink /etc/vmware/pci.ids: No such file or directory

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: vmklinux

2019-04-18T06:47:44Z jumpstart[65945]: executing start plugin: vmkdevmgr

2019-04-18T06:47:44Z jumpstart[66025]: Starting vmkdevmgr

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: register-vmw-mpp

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: register-vmw-satp

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: register-vmw-psp

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: etherswitch

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: aslr

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: random

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: storage-early-config-dev-settings

2019-04-18T06:47:50Z jumpstart[65945]: executing start plugin: networking-drivers

2019-04-18T06:47:50Z jumpstart[66145]: Loading network device drivers

2019-04-18T06:47:53Z jumpstart[66145]: LoadVmklinuxDriver: Loaded module bnx2

2019-04-18T06:47:54Z jumpstart[65945]: executing start plugin: register-vmw-vaai

2019-04-18T06:47:54Z jumpstart[65945]: executing start plugin: usb

2019-04-18T06:47:54Z jumpstart[65945]: executing start plugin: local-storage

2019-04-18T06:47:54Z jumpstart[65945]: executing start plugin: psa-mask-paths

2019-04-18T06:47:54Z jumpstart[65945]: executing start plugin: network-uplink-init

2019-04-18T06:47:54Z jumpstart[66226]: Trying to connect...

2019-04-18T06:47:54Z jumpstart[66226]: Connected.

2019-04-18T06:47:57Z jumpstart[66226]: Received processed

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: psa-nmp-pre-claim-config

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: psa-filter-pre-claim-config

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: restore-system-uuid

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: restore-storage-multipathing

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: network-support

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: psa-load-rules

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: vds-vmkapi-compatibility

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: psa-filter-post-claim-config

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: psa-nmp-post-claim-config

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: mlx4_en

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: dvfilters-vmkapi-compatibility

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: vds-config

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: storage-drivers

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: vxlan-base

2019-04-18T06:47:57Z jumpstart[65945]: executing start plugin: firewall

2019-04-18T06:47:58Z jumpstart[65945]: executing start plugin: dvfilter-config

2019-04-18T06:47:58Z jumpstart[65945]: executing start plugin: dvfilter-generic-fastpath

2019-04-18T06:47:58Z jumpstart[65945]: executing start plugin: lacp-daemon

2019-04-18T06:47:58Z watchdog-net-lacp: [66328] Begin '/usr/sbin/net-lacp', min-uptime = 1000, max-quick-failures = 100, max-total-failures = 100, bg_pid_file = '', reboot-flag = '0'

2019-04-18T06:47:58Z watchdog-net-lacp: Executing '/usr/sbin/net-lacp'

2019-04-18T06:47:58Z jumpstart[65945]: executing start plugin: storage-psa-init

2019-04-18T06:47:58Z jumpstart[66345]: Trying to connect...

2019-04-18T06:47:58Z jumpstart[66345]: Connected.

2019-04-18T06:47:58Z jumpstart[66345]: Received processed

2019-04-18T06:47:58Z jumpstart[65945]: executing start plugin: restore-networking

2019-04-18T06:47:59Z jumpstart[65970]: NetworkInfoImpl: Enabling 1 netstack instances during boot

2019-04-18T06:48:04Z jumpstart[65970]: VmKernelNicInfo::LoadConfig: Storing previous management interface:'vmk0'

2019-04-18T06:48:04Z jumpstart[65970]: VmKernelNicInfo::LoadConfig: Processing migration for'vmk0'

2019-04-18T06:48:04Z jumpstart[65970]: VmKernelNicInfo::LoadConfig: Processing migration for'vmk1'

2019-04-18T06:48:04Z jumpstart[65970]: VmKernelNicInfo::LoadConfig: Processing config for'vmk0'

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: GetManagementInterface: Tagging vmk0 as Management

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: SetTaggedManagementInterface: Writing vmk0 to the ManagementIface node

2019-04-18T06:48:04Z jumpstart[65970]: VmkNic::SetIpConfigInternal: IPv4 address set up successfully on vmknic vmk0

2019-04-18T06:48:04Z jumpstart[65970]: VmkNic: Ipv6 not Enabled

2019-04-18T06:48:04Z jumpstart[65970]: VmkNic::SetIpConfigInternal: IPv6 address set up successfully on vmknic vmk0

2019-04-18T06:48:04Z jumpstart[65970]: RoutingInfo: LoadConfig called on RoutingInfo

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: GetManagementInterface: Tagging vmk0 as Management

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: SetTaggedManagementInterface: Writing vmk0 to the ManagementIface node

2019-04-18T06:48:04Z jumpstart[65970]: VmkNic::Enable: netstack:'defaultTcpipStack', interface:'vmk0', portStr:'Management Network'

2019-04-18T06:48:04Z jumpstart[65970]: VmKernelNicInfo::LoadConfig: Processing config for'vmk1'

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: GetManagementInterface: Tagging vmk0 as Management

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: SetTaggedManagementInterface: Writing vmk0 to the ManagementIface node

2019-04-18T06:48:04Z jumpstart[65970]: VmkNic::SetIpConfigInternal: IPv4 address set up successfully on vmknic vmk1

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: GetManagementInterface: Tagging vmk0 as Management

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: SetTaggedManagementInterface: Writing vmk0 to the ManagementIface node

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: VmkNic::SetIpConfigInternal: IPv6 address set up successfully on vmknic vmk1

2019-04-18T06:48:04Z jumpstart[65970]: RoutingInfo: LoadConfig called on RoutingInfo

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: GetManagementInterface: Tagging vmk0 as Management

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: SetTaggedManagementInterface: Writing vmk0 to the ManagementIface node

2019-04-18T06:48:04Z jumpstart[65970]: VmkNic::Enable: netstack:'defaultTcpipStack', interface:'vmk1', portStr:'NFS-FreeNAS'

2019-04-18T06:48:04Z jumpstart[65970]: RoutingInfo: LoadConfig called on RoutingInfo

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: GetManagementInterface: Tagging vmk0 as Management

2019-04-18T06:48:04Z jumpstart[65970]: VmkNicImpl::IsDhclientProcess: No valid dhclient pid found

2019-04-18T06:48:04Z jumpstart[65970]: 2019-04-18T06:48:04Z jumpstart[65970]: SetTaggedManagementInterface: Writing vmk0 to the ManagementIface node

2019-04-18T06:48:04Z jumpstart[65945]: executing start plugin: random-seed

2019-04-18T06:48:04Z jumpstart[65945]: executing start plugin: dvfilters

2019-04-18T06:48:04Z jumpstart[65945]: executing start plugin: restore-pxe-marker

2019-04-18T06:48:04Z jumpstart[65945]: executing start plugin: auto-configure-networking

2019-04-18T06:48:04Z jumpstart[65945]: executing start plugin: storage-early-configuration

2019-04-18T06:48:04Z jumpstart[66412]: 66412:VVOLLIB : VVolLib_GetSoapContext:379: Using 30 secs for soap connect timeout.

2019-04-18T06:48:04Z jumpstart[66412]: 66412:VVOLLIB : VVolLib_GetSoapContext:380: Using 200 secs for soap receive timeout.

2019-04-18T06:48:04Z jumpstart[66412]: 66412:VVOLLIB : VVolLibTracingInit:89: Successfully initialized the VVolLib tracing module

2019-04-18T06:48:04Z jumpstart[66412]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-18T06:48:04Z jumpstart[66412]: DictionaryLoad: Cannot open file "//.vmware/config": No such file or directory.

2019-04-18T06:48:04Z jumpstart[66412]: DictionaryLoad: Cannot open file "//.vmware/preferences": No such file or directory.

2019-04-18T06:48:04Z jumpstart[66412]: lib/ssl: OpenSSL using FIPS_drbg for RAND

2019-04-18T06:48:04Z jumpstart[66412]: lib/ssl: protocol list tls1.2

2019-04-18T06:48:04Z jumpstart[66412]: lib/ssl: protocol list tls1.2 (openssl flags 0x17000000)

2019-04-18T06:48:04Z jumpstart[66412]: lib/ssl: cipher list !aNULL:kECDH+AESGCM:ECDH+AESGCM:RSA+AESGCM:kECDH+AES:ECDH+AES:RSA+AES

2019-04-18T06:48:04Z jumpstart[65945]: executing start plugin: bnx2fc

2019-04-18T06:48:04Z jumpstart[65945]: executing start plugin: software-iscsi

2019-04-18T06:48:04Z jumpstart[65970]: iScsi: No iBFT data present in the BIOS

2019-04-18T06:48:05Z iscsid: Notice: iSCSI Database already at latest schema. (Upgrade Skipped).

2019-04-18T06:48:05Z iscsid: iSCSI MASTER Database opened. (0x21e8008)

2019-04-18T06:48:05Z iscsid: LogLevel = 0

2019-04-18T06:48:05Z iscsid: LogSync = 0

2019-04-18T06:48:05Z iscsid: memory (180) MB successfully reserved for 1024 sessions

2019-04-18T06:48:05Z iscsid: allocated transportCache for transport (bnx2i-b499babd4e64) idx (0) size (460808)

2019-04-18T06:48:05Z iscsid: allocated transportCache for transport (bnx2i-b499babd4e62) idx (1) size (460808)

2019-04-18T06:48:05Z iscsid: allocated transportCache for transport (bnx2i-b499babd4e60) idx (2) size (460808)

2019-04-18T06:48:05Z iscsid: allocated transportCache for transport (bnx2i-b499babd4e5e) idx (3) size (460808)

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e5e Pending=0 Failed=0

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e5e Pending=0 Failed=0

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e60 Pending=0 Failed=0

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e60 Pending=0 Failed=0

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e62 Pending=0 Failed=0

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e62 Pending=0 Failed=0

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e64 Pending=0 Failed=0

2019-04-18T06:48:06Z iscsid: DISCOVERY: transport_name=bnx2i-b499babd4e64 Pending=0 Failed=0

2019-04-18T06:48:06Z jumpstart[65945]: executing start plugin: fcoe-config

2019-04-18T06:48:06Z jumpstart[65945]: executing start plugin: storage-path-claim

2019-04-18T06:48:10Z jumpstart[65970]: StorageInfo: Number of paths 3

2019-04-18T06:48:14Z jumpstart[65970]: StorageInfo: Number of devices 3

2019-04-18T06:48:14Z jumpstart[65970]: StorageInfo: Unable to name LUN mpx.vmhba0:C0:T0:L0: Cannot set display name on this device. Unable to guarantee name will not change across reboots or media change.

2019-04-18T06:50:20Z mark: storage-path-claim-completed

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: gss

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: mount-filesystems

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: restore-paths

2019-04-18T06:48:14Z jumpstart[65970]: StorageInfo: Unable to name LUN mpx.vmhba0:C0:T0:L0: Cannot set display name on this device. Unable to guarantee name will not change across reboots or media change.

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: filesystem-drivers

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: rpc

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: dump-partition

2019-04-18T06:48:14Z jumpstart[65970]: execution of 'system coredump partition set --enable=true --smart' failed : Unable to smart activate a dump partition. Error was: No suitable diagnostic partitions found..

2019-04-18T06:48:14Z jumpstart[65970]: 2019-04-18T06:48:14Z jumpstart[65945]: Executor failed executing esxcli command system coredump partition set --enable=true --smart

2019-04-18T06:48:14Z jumpstart[65945]: Method invocation failed: dump-partition->start() failed: error while executing the cli

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: vsan-devel

2019-04-18T06:48:14Z jumpstart[66433]: VsanDevel: DevelBootDelay: 0

2019-04-18T06:48:14Z jumpstart[66433]: VsanDevel: DevelWipeConfigOnBoot: 0

2019-04-18T06:48:14Z jumpstart[66433]: VsanDevel: DevelTagSSD: Starting

2019-04-18T06:48:14Z jumpstart[66433]: 66433:VVOLLIB : VVolLib_GetSoapContext:379: Using 30 secs for soap connect timeout.

2019-04-18T06:48:14Z jumpstart[66433]: 66433:VVOLLIB : VVolLib_GetSoapContext:380: Using 200 secs for soap receive timeout.

2019-04-18T06:48:14Z jumpstart[66433]: 66433:VVOLLIB : VVolLibTracingInit:89: Successfully initialized the VVolLib tracing module

2019-04-18T06:48:14Z jumpstart[66433]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-18T06:48:14Z jumpstart[66433]: DictionaryLoad: Cannot open file "//.vmware/config": No such file or directory.

2019-04-18T06:48:14Z jumpstart[66433]: DictionaryLoad: Cannot open file "//.vmware/preferences": No such file or directory.

2019-04-18T06:48:14Z jumpstart[66433]: lib/ssl: OpenSSL using FIPS_drbg for RAND

2019-04-18T06:48:14Z jumpstart[66433]: lib/ssl: protocol list tls1.2

2019-04-18T06:48:14Z jumpstart[66433]: lib/ssl: protocol list tls1.2 (openssl flags 0x17000000)

2019-04-18T06:48:14Z jumpstart[66433]: lib/ssl: cipher list !aNULL:kECDH+AESGCM:ECDH+AESGCM:RSA+AESGCM:kECDH+AES:ECDH+AES:RSA+AES

2019-04-18T06:48:14Z jumpstart[66433]: VsanDevel: DevelTagSSD: Done.

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: vmfs

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: ufs

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: vfat

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: nfsgssd

2019-04-18T06:48:14Z watchdog-nfsgssd: [66636] Begin '/usr/lib/vmware/nfs/bin/nfsgssd -f -a', min-uptime = 60, max-quick-failures = 128, max-total-failures = 65536, bg_pid_file = '', reboot-flag = '0'

2019-04-18T06:48:14Z watchdog-nfsgssd: Executing '/usr/lib/vmware/nfs/bin/nfsgssd -f -a'

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: vsan

2019-04-18T06:48:14Z nfsgssd[66646]: Could not expand environment variable HOME.

2019-04-18T06:48:14Z nfsgssd[66646]: Could not expand environment variable HOME.

2019-04-18T06:48:14Z nfsgssd[66646]: DictionaryLoad: Cannot open file "/usr/lib/vmware/config": No such file or directory.

2019-04-18T06:48:14Z nfsgssd[66646]: DictionaryLoad: Cannot open file "~/.vmware/config": No such file or directory.

2019-04-18T06:48:14Z nfsgssd[66646]: DictionaryLoad: Cannot open file "~/.vmware/preferences": No such file or directory.

2019-04-18T06:48:14Z nfsgssd[66646]: lib/ssl: OpenSSL using FIPS_drbg for RAND

2019-04-18T06:48:14Z nfsgssd[66646]: lib/ssl: protocol list tls1.2

2019-04-18T06:48:14Z nfsgssd[66646]: lib/ssl: protocol list tls1.2 (openssl flags 0x17000000)

2019-04-18T06:48:14Z nfsgssd[66646]: lib/ssl: cipher list !aNULL:kECDH+AESGCM:ECDH+AESGCM:RSA+AESGCM:kECDH+AES:ECDH+AES:RSA+AES

2019-04-18T06:48:14Z nfsgssd[66646]: Empty epoch file

2019-04-18T06:48:14Z nfsgssd[66646]: Starting with epoch 1

2019-04-18T06:48:14Z nfsgssd[66646]: Connected to SunRPCGSS version 1.0

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: krb5

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: etc-hosts

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: nfs

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: nfs41

2019-04-18T06:48:14Z jumpstart[65945]: executing start plugin: mount-disk-fs

2019-04-18T06:48:15Z jumpstart[65970]: VmFileSystem: Automounted volume 5a6f6646-d13e2d89-fd8d-b499babd4e5e

2019-04-18T06:48:15Z jumpstart[65970]: VmFileSystem: Automounted volume 5ab363c3-c36e8e9f-8cfc-b499babd4e5e